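A stand-alone ClickHouse server is easy to set up, but it is a single point of failure and is not recommended for production. ClickHouse supports a replicated cluster mode, so availability can be improved by adding data replicas. This post records how to build a highly available 1S-2R (one shard, two replicas) cluster, plus some common operations.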

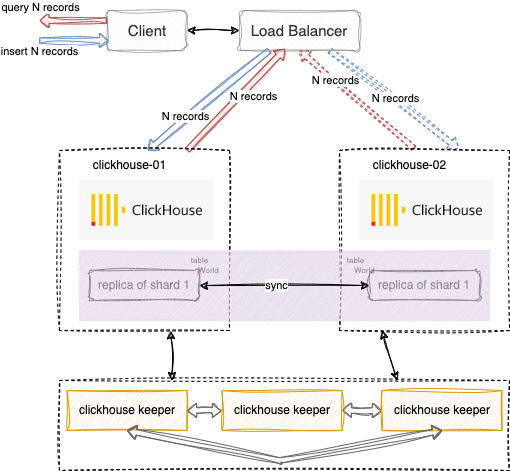

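The diagram shows the ClickHouse 1S-2R architecture. Two ClickHouse servers (clickhouse-01 and clickhouse-02) each hold a replica of the single shard, and three ClickHouse Keeper nodes coordinate replication.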
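Why use three Keeper nodes for only two data replicas? ClickHouse Keeper replicates its own state with the Raft consensus algorithm. It keeps accepting writes only while a strict majority of its nodes is reachable. The small Python sketch below does that arithmetic; the helper names are hypothetical, not ClickHouse APIs. A 3-node ensemble survives one failure, while a 2-node ensemble survives none.

```python
# Majority-quorum arithmetic for a Raft ensemble such as ClickHouse Keeper.
# A write is committed only after a strict majority of nodes acknowledge it.


def quorum_size(nodes: int) -> int:
    """Smallest strict majority of an ensemble with `nodes` members."""
    return nodes // 2 + 1


def tolerated_failures(nodes: int) -> int:
    """Number of nodes that can fail while a majority is still reachable."""
    return nodes - quorum_size(nodes)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} node(s): quorum={quorum_size(n)}, "
              f"tolerates {tolerated_failures(n)} failure(s)")
```

Even-sized ensembles buy nothing: 4 nodes still tolerate only one failure, which is why odd sizes such as 3 or 5 are the usual choice.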

Modify XML File

For the full set of configuration options, see the official documentation, Replication for fault tolerance.

```xml
<!-- config.d/config.xml: common settings for clickhouse-01 and clickhouse-02 -->
<clickhouse>
    <remote_servers>
        <cluster_1S_2R>
            <shard>
                <internal_replication>true</internal_replication>
                <replica>
                    <host>clickhouse-01</host>
                    <port>9000</port>
                </replica>
                <replica>
                    <host>clickhouse-02</host>
                    <port>9000</port>
                </replica>
            </shard>
        </cluster_1S_2R>
    </remote_servers>
    <zookeeper>
        <node>
            <host>clickhouse-keeper-01</host>
            <port>9181</port>
        </node>
        <node>
            <host>clickhouse-keeper-02</host>
            <port>9181</port>
        </node>
        <node>
            <host>clickhouse-keeper-03</host>
            <port>9181</port>
        </node>
    </zookeeper>
    <!-- macros are set separately in each node's config.xml -->
    <macros>
        <shard>01</shard>
        <replica>01</replica> <!-- clickhouse-01; use 02 on clickhouse-02 -->
        <cluster>cluster_1S_2R</cluster>
    </macros>
</clickhouse>
```

Each Keeper node's `keeper_config.xml` lists the whole ensemble in `raft_configuration`, and each node sets its own `server_id`:

```xml
<!-- keeper_config.xml (fragment; other keeper_server settings omitted) -->
<clickhouse>
    <keeper_server>
        <tcp_port>9181</tcp_port>
        <!-- unique per node: 1, 2, 3 on clickhouse-keeper-01/02/03 -->
        <server_id>1</server_id>
        <raft_configuration>
            <server>
                <id>1</id>
                <hostname>clickhouse-keeper-01</hostname>
                <port>9234</port>
            </server>
            <server>
                <id>2</id>
                <hostname>clickhouse-keeper-02</hostname>
                <port>9234</port>
            </server>
            <server>
                <id>3</id>
                <hostname>clickhouse-keeper-03</hostname>
                <port>9234</port>
            </server>
        </raft_configuration>
    </keeper_server>
</clickhouse>
```
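clickhouse-01 and clickhouse-02 differ only in the `replica` macro, and all three Keeper nodes share the same `raft_configuration`. Generating these fragments can therefore be less error-prone than editing each copy by hand. The following Python sketch uses only `xml.etree.ElementTree`. `macros_xml` and `raft_configuration_xml` are hypothetical helpers, and writing their output into the mounted files is left to you.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

# Hypothetical helpers: render the per-node <macros> fragment and the shared
# Keeper <raft_configuration> so the hand-edited copies cannot drift apart.


def macros_xml(shard: str, replica: str, cluster: str = "cluster_1S_2R") -> str:
    """Return <clickhouse><macros>...</macros></clickhouse> for one server."""
    root = ET.Element("clickhouse")
    macros = ET.SubElement(root, "macros")
    for tag, value in (("shard", shard), ("replica", replica), ("cluster", cluster)):
        ET.SubElement(macros, tag).text = value
    return ET.tostring(root, encoding="unicode")


def raft_configuration_xml(hosts: list[str], raft_port: int = 9234) -> str:
    """Return the <raft_configuration> block shared by every Keeper node."""
    raft = ET.Element("raft_configuration")
    # Ids start at 1 and must match each Keeper node's own <server_id>.
    for server_id, host in enumerate(hosts, start=1):
        server = ET.SubElement(raft, "server")
        ET.SubElement(server, "id").text = str(server_id)
        ET.SubElement(server, "hostname").text = host
        ET.SubElement(server, "port").text = str(raft_port)
    return ET.tostring(raft, encoding="unicode")


if __name__ == "__main__":
    for replica in ("01", "02"):  # clickhouse-01, clickhouse-02
        print(macros_xml(shard="01", replica=replica))
    keepers = [f"clickhouse-keeper-{i:02d}" for i in (1, 2, 3)]
    print(raft_configuration_xml(keepers))
```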

Up ClickHouse Server

Create the ClickHouse Server 1S-2R cluster with docker compose:

```yaml
# docker-compose.yml
version: '3.8'
services:
  clickhouse-01:
    image: "clickhouse/clickhouse-server:${CHVER:-latest}"
    user: "101:101"
    container_name: clickhouse-01
    hostname: clickhouse-01
    networks:
      - cluster_1S_2R_ch_proxy
    volumes:
      - ./ck-01/config.d/config.xml:/etc/clickhouse-server/config.d/config.xml
      - ./ck-01/users.d/users.xml:/etc/clickhouse-server/users.d/users.xml
      - ./ck-01/data:/var/lib/clickhouse:rw
      - ./ck-01/logs:/var/log/clickhouse-server
    ports:
      - "127.0.0.1:18123:8123"
      - "127.0.0.1:19000:9000"
    depends_on:
      - clickhouse-keeper-01
      - clickhouse-keeper-02
      - clickhouse-keeper-03
  clickhouse-02:
    image: "clickhouse/clickhouse-server:${CHVER:-latest}"
    user: "101:101"
    container_name: clickhouse-02
    hostname: clickhouse-02
    networks:
      - cluster_1S_2R_ch_proxy
    volumes:
      - ./ck-02/config.d/config.xml:/etc/clickhouse-server/config.d/config.xml
      - ./ck-02/users.d/users.xml:/etc/clickhouse-server/users.d/users.xml
      - ./ck-02/data:/var/lib/clickhouse:rw
      - ./ck-02/logs:/var/log/clickhouse-server
    ports:
      - "127.0.0.1:18124:8123"
      - "127.0.0.1:19001:9000"
    depends_on:
      - clickhouse-keeper-01
      - clickhouse-keeper-02
      - clickhouse-keeper-03
  clickhouse-keeper-01:
    image: "clickhouse/clickhouse-keeper:${CHKVER:-latest-alpine}"
    user: "101:101"
    container_name: clickhouse-keeper-01
    hostname: clickhouse-keeper-01
    networks:
      - cluster_1S_2R_ch_proxy
    volumes:
      - ./ck-kp-01/config/keeper_config.xml:/etc/clickhouse-keeper/keeper_config.xml
      - ./ck-kp-01/config/coordination:/var/lib/clickhouse/coordination
      - ./ck-kp-01/config/log:/var/log/clickhouse-keeper
    ports:
      - "127.0.0.1:19181:9181"
  clickhouse-keeper-02:
    image: "clickhouse/clickhouse-keeper:${CHKVER:-latest-alpine}"
    user: "101:101"
    container_name: clickhouse-keeper-02
    hostname: clickhouse-keeper-02
    networks:
      - cluster_1S_2R_ch_proxy
    volumes:
      - ./ck-kp-02/config/keeper_config.xml:/etc/clickhouse-keeper/keeper_config.xml
      - ./ck-kp-02/config/coordination:/var/lib/clickhouse/coordination
      - ./ck-kp-02/config/log:/var/log/clickhouse-keeper
    ports:
      - "127.0.0.1:19182:9181"
  clickhouse-keeper-03:
    image: "clickhouse/clickhouse-keeper:${CHKVER:-latest-alpine}"
    user: "101:101"
    container_name: clickhouse-keeper-03
    hostname: clickhouse-keeper-03
    networks:
      - cluster_1S_2R_ch_proxy
    volumes:
      - ./ck-kp-03/config/keeper_config.xml:/etc/clickhouse-keeper/keeper_config.xml
      - ./ck-kp-03/config/coordination:/var/lib/clickhouse/coordination
      - ./ck-kp-03/config/log:/var/log/clickhouse-keeper
    ports:
      - "127.0.0.1:19183:9181"
networks:
  cluster_1S_2R_ch_proxy:
    driver: bridge
```

```shell
# start the cluster
docker compose -f "docker-compose.yml" up -d --build
```
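After `up -d` returns, the containers may still need a few seconds before ClickHouse accepts connections. The following is a minimal Python sketch that assumes the host ports 18123 and 18124 published in the compose file. It polls each server's HTTP `/ping` endpoint, which ClickHouse answers with `Ok.`. `is_ready` and `wait_for` are illustrative names, not part of any ClickHouse tooling.

```python
import time
import urllib.request

# HTTP ports published by docker-compose.yml for clickhouse-01 / clickhouse-02.
PING_URLS = ["http://127.0.0.1:18123/ping", "http://127.0.0.1:18124/ping"]


def is_ready(url: str, timeout: float = 2.0) -> bool:
    """True once ClickHouse's HTTP /ping endpoint answers 'Ok.'."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.read().decode().strip() == "Ok."
    except OSError:  # covers URLError, connection refused and socket timeouts
        return False


def wait_for(urls, check=is_ready, attempts: int = 30, delay: float = 1.0) -> bool:
    """Poll until every URL passes `check`, or give up after `attempts` rounds."""
    pending = list(urls)
    for _ in range(attempts):
        pending = [u for u in pending if not check(u)]
        if not pending:
            return True
        time.sleep(delay)
    return False


if __name__ == "__main__":
    print("cluster is up" if wait_for(PING_URLS) else "timed out waiting for /ping")
```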

Verify ClickHouse Keeper

Check that ClickHouse Keeper started successfully by sending it the `mntr` four-letter command:

```shell
$ echo mntr | nc localhost 19181
zk_version v23.7.4.5-stable-bd2fcd445534e57cc5aa8c170cc25b7479b79c1c
zk_avg_latency 3
zk_max_latency 71
zk_min_latency 0
zk_packets_received 325
zk_packets_sent 325
zk_num_alive_connections 3
zk_outstanding_requests 0
zk_server_state leader
zk_znode_count 258
zk_watch_count 9
zk_ephemerals_count 6
zk_approximate_data_size 82531
zk_key_arena_size 28672
zk_latest_snapshot_size 0
zk_open_file_descriptor_count 62
zk_max_file_descriptor_count 18446744073709551615
zk_followers 2
zk_synced_followers 2
```

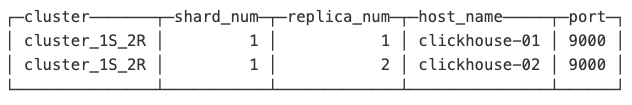
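The same `mntr` check can be scripted. The Python sketch below opens a plain TCP connection, equivalent to `echo mntr | nc`, and parses the `key value` lines. It then checks that the leader reports as many synced followers as followers. It assumes the host ports 19181, 19182 and 19183 from docker-compose.yml, and the function names are hypothetical.

```python
import socket


def four_letter_word(host: str, port: int, cmd: str = "mntr", timeout: float = 2.0) -> str:
    """Send a four-letter command to Keeper, like `echo mntr | nc host port`."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(cmd.encode())
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # Keeper closes the connection after replying
                break
            chunks.append(data)
    return b"".join(chunks).decode()


def parse_mntr(text: str) -> dict:
    """Turn 'key<whitespace>value' lines into a dict of strings."""
    stats = {}
    for line in text.splitlines():
        parts = line.split(None, 1)
        if len(parts) == 2:
            stats[parts[0]] = parts[1].strip()
    return stats


def leader_in_sync(stats: dict) -> bool:
    """On the leader, every follower should also be a synced follower."""
    return (stats.get("zk_server_state") == "leader"
            and stats.get("zk_followers") == stats.get("zk_synced_followers"))


if __name__ == "__main__":
    for port in (19181, 19182, 19183):  # host ports from docker-compose.yml
        stats = parse_mntr(four_letter_word("localhost", port))
        state = stats.get("zk_server_state")
        print(port, state, "(all followers synced)" if leader_in_sync(stats) else "")
```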

Cluster Info

View the cluster information:

```shell
# log in to a ClickHouse server
docker exec -it clickhouse-01 clickhouse client --user default --password 123456
```

```sql
-- show cluster info
SELECT cluster, shard_num, replica_num, host_name, port
FROM system.clusters
WHERE cluster = 'cluster_1S_2R'
ORDER BY shard_num ASC, replica_num ASC
```

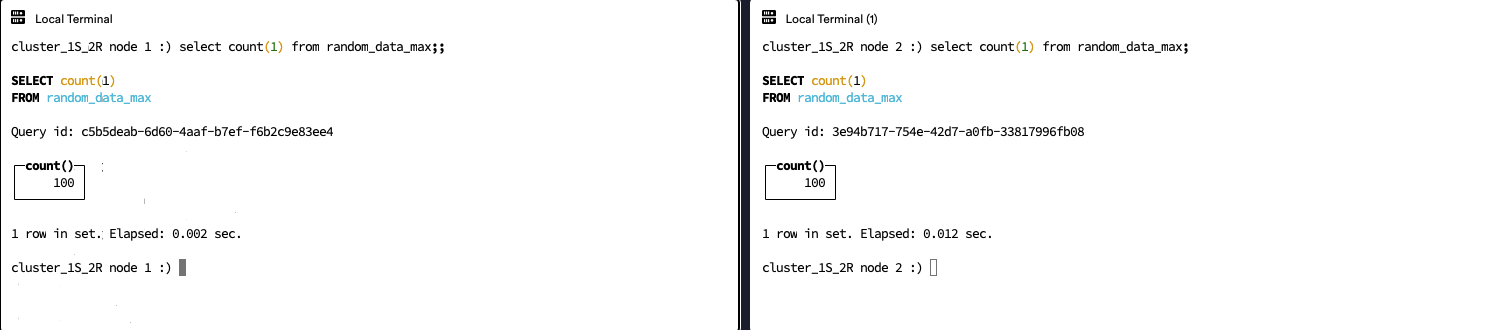
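The same query can also be run without `docker exec`, over ClickHouse's HTTP interface. The following is a Python sketch that assumes the `default`/`123456` credentials used above and the published port 18123. It sends the credentials in the `X-ClickHouse-User` and `X-ClickHouse-Key` headers and splits the default TabSeparated output into rows. `build_url`, `run_query` and `parse_tsv` are hypothetical helper names.

```python
import urllib.parse
import urllib.request

CLUSTER_SQL = (
    "SELECT cluster, shard_num, replica_num, host_name, port "
    "FROM system.clusters WHERE cluster = 'cluster_1S_2R' "
    "ORDER BY shard_num ASC, replica_num ASC"
)


def build_url(base: str, sql: str) -> str:
    """Pass the SQL in the `query` URL parameter of the HTTP interface."""
    return base.rstrip("/") + "/?" + urllib.parse.urlencode({"query": sql})


def run_query(base: str, sql: str, user: str = "default", password: str = "123456",
              timeout: float = 5.0) -> str:
    req = urllib.request.Request(
        build_url(base, sql),
        headers={"X-ClickHouse-User": user, "X-ClickHouse-Key": password},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode()


def parse_tsv(body: str) -> list:
    """Default HTTP output is TabSeparated: one row per line, tab-separated."""
    return [line.split("\t") for line in body.splitlines() if line]


if __name__ == "__main__":
    for row in parse_tsv(run_query("http://127.0.0.1:18123", CLUSTER_SQL)):
        print(row)
```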

Data Sample

A simple data test:

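```sql
-- database: ON CLUSTER creates it on both replicas
CREATE DATABASE dp ON CLUSTER cluster_1S_2R;

-- table: ReplicatedMergeTree() without arguments relies on the {shard}/{replica} macros
CREATE TABLE dp.random_data_max ON CLUSTER cluster_1S_2R
(
    date String,
    val UInt8,
    timestamp DateTime64(3)
)
ENGINE = ReplicatedMergeTree()
PARTITION BY toYYYYMMDD(timestamp)
ORDER BY timestamp;

-- insert data (run on one node only)
INSERT INTO dp.random_data_max (date, val, timestamp)
SELECT today(), number, now()
FROM numbers(100);
```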

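The data on clickhouse-01 and clickhouse-02 is identical. The rows were inserted on one node, and ReplicatedMergeTree replicated them to the other node through ClickHouse Keeper.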

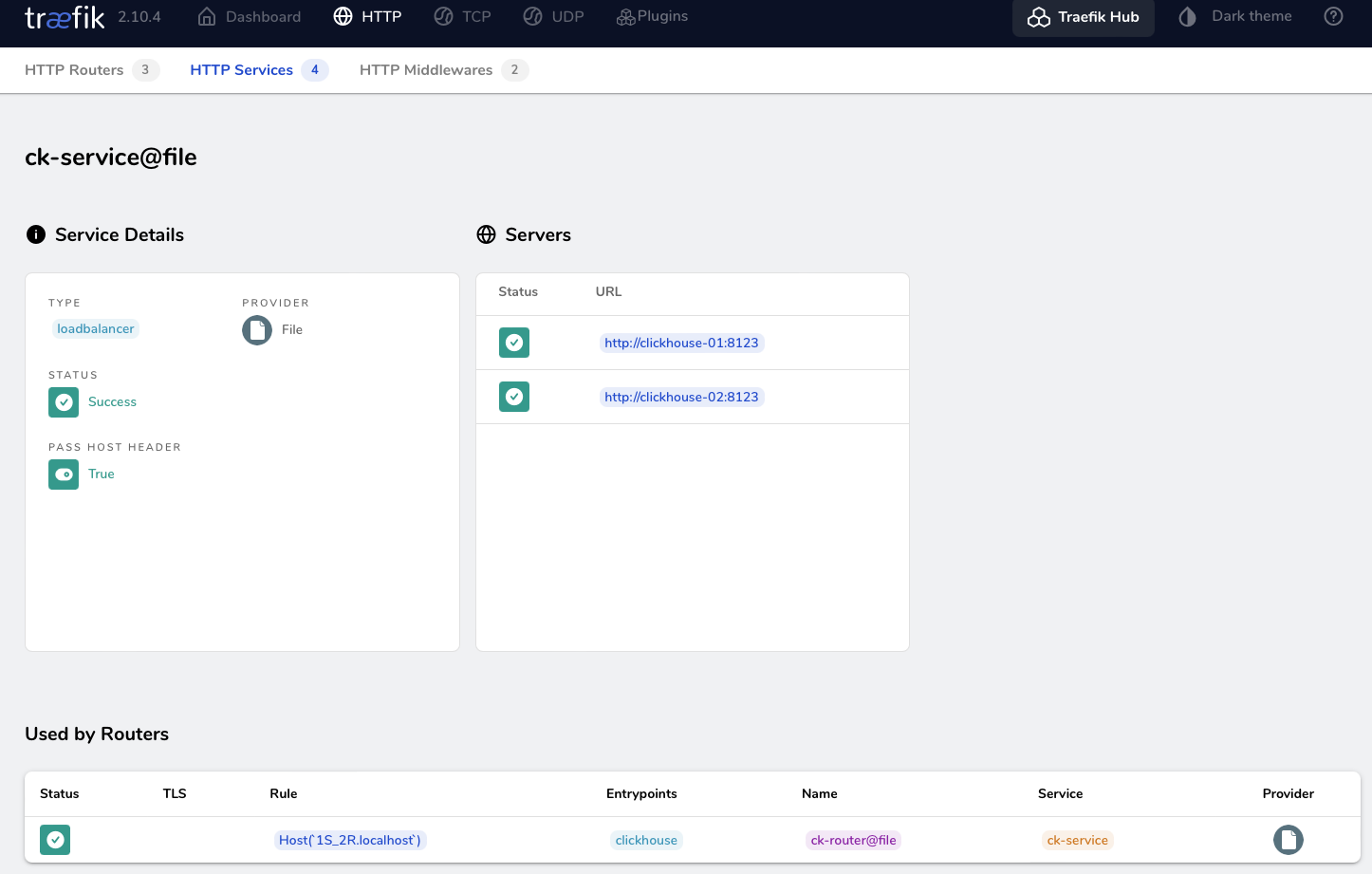
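To check consistency without screenshots, query each replica directly on its own port and compare a cheap fingerprint of the table. This Python sketch assumes ports 18123 and 18124 and the `dp.random_data_max` table from the example. Using `count()` plus `sum(val)` is an illustrative fingerprint, not a full checksum, and the helper names are hypothetical.

```python
import urllib.parse
import urllib.request

# Each replica's own HTTP port, as published in docker-compose.yml.
REPLICAS = {
    "clickhouse-01": "http://127.0.0.1:18123",
    "clickhouse-02": "http://127.0.0.1:18124",
}
# count() plus sum(val) serves as a cheap fingerprint of the replicated table.
CHECK_SQL = "SELECT count(), sum(val) FROM dp.random_data_max"


def query(base: str, sql: str, user: str = "default", password: str = "123456") -> str:
    url = base + "/?" + urllib.parse.urlencode({"query": sql})
    req = urllib.request.Request(
        url, headers={"X-ClickHouse-User": user, "X-ClickHouse-Key": password})
    with urllib.request.urlopen(req, timeout=5.0) as resp:
        return resp.read().decode().strip()


def replicas_agree(results: dict) -> bool:
    """True when every replica returned the same non-empty answer."""
    values = set(results.values())
    return len(values) == 1 and "" not in values


if __name__ == "__main__":
    results = {name: query(base, CHECK_SQL) for name, base in REPLICAS.items()}
    print(results, "consistent" if replicas_agree(results) else "diverged")
```

Replication is asynchronous by default, so a replica may lag briefly right after an INSERT. Running `SYSTEM SYNC REPLICA dp.random_data_max` on the lagging node waits for it to catch up.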

Traefik

Traefik is a reverse proxy for backend services and supports both HTTP and TCP proxying. Here Traefik load-balances access to clickhouse-01 and clickhouse-02 over the HTTP port (8123).

Modify YML Config

```yaml
# traefik.yml
providers:
  file:
    filename: "/tmp/dynamic.yml"
    watch: true
api:
  insecure: true
entryPoints:
  clickhouse:
    address: ":8123"
```

```yaml
# dynamic.yml: round-robin across both replicas
http:
  routers:
    ck-router:
      entryPoints:
        - "clickhouse"
      rule: "Host(`1S_2R.localhost`)"
      service: ck-service
  services:
    ck-service:
      loadBalancer:
        servers:
          - url: "http://clickhouse-01:8123"
          - url: "http://clickhouse-02:8123"
```

Alternatively, weight the servers:

```yaml
# dynamic.yml: weighted variant (3:1 in favor of clickhouse-01)
http:
  routers:
    ck-router:
      entryPoints:
        - "clickhouse"
      rule: "Host(`1S_2R.localhost`)"
      service: ck-service
  services:
    ck-service:
      weighted:
        services:
          - name: ck-01
            weight: 3
          - name: ck-02
            weight: 1
    ck-01:
      loadBalancer:
        servers:
          - url: "http://clickhouse-01:8123"
    ck-02:
      loadBalancer:
        servers:
          - url: "http://clickhouse-02:8123"
```
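To show what a 3:1 weight means in practice, here is a Python sketch of smooth weighted round-robin, the algorithm popularized by nginx. It is an illustration only, and Traefik's own weighted scheduler may be implemented differently. Over many requests, both approaches send about three of every four requests to `ck-01`.

```python
from collections import Counter


def smooth_weighted_round_robin(weights: dict, requests: int) -> list:
    """Nginx-style smooth weighted round-robin (illustrative, not Traefik's code).

    Each round, every backend's running score grows by its weight. The highest
    score wins and pays back the total weight, which interleaves the picks.
    """
    total = sum(weights.values())
    score = {name: 0 for name in weights}
    picks = []
    for _ in range(requests):
        for name, weight in weights.items():
            score[name] += weight
        chosen = max(score, key=score.get)  # ties go to the first-listed backend
        score[chosen] -= total
        picks.append(chosen)
    return picks


if __name__ == "__main__":
    order = smooth_weighted_round_robin({"ck-01": 3, "ck-02": 1}, 8)
    print(order)
    print(Counter(order))
```

With weights 3 and 1 the picks repeat every four requests as `ck-01, ck-01, ck-02, ck-01`, so `ck-02` is not starved by long bursts to `ck-01`.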

If the Host in the rule does not end with `.localhost`, add a mapping from that name to 127.0.0.1 in your local hosts file.
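A minimal Python sketch of that rule follows, with hypothetical helper names. Names under `.localhost` are skipped, and any other name gets a `127.0.0.1` hosts-file line. `ck.example.internal` is a placeholder host. `resolves_to_loopback` only asks the system resolver, so it is a best-effort check.

```python
import ipaddress
import socket


def needs_hosts_entry(host: str) -> bool:
    """Names under the reserved .localhost domain are expected to map to loopback."""
    name = host.rstrip(".").lower()
    return not (name == "localhost" or name.endswith(".localhost"))


def hosts_line(host: str, ip: str = "127.0.0.1") -> str:
    """Line to append to /etc/hosts (or the Windows hosts file)."""
    return f"{ip}\t{host}"


def resolves_to_loopback(host: str) -> bool:
    """Best-effort check that the system resolver maps `host` to loopback."""
    try:
        infos = socket.getaddrinfo(host, None)
    except socket.gaierror:
        return False
    # Strip any IPv6 zone suffix such as '%lo0' before parsing.
    return all(ipaddress.ip_address(info[4][0].split("%")[0]).is_loopback
               for info in infos)


if __name__ == "__main__":
    host = "ck.example.internal"  # hypothetical non-.localhost rule host
    if needs_hosts_entry(host) and not resolves_to_loopback(host):
        print("add to hosts file:", hosts_line(host))
```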

Up Traefik Server

```yaml
# docker-compose.yml: add a traefik service under services:
  ck-proxy:
    image: traefik:v2.10
    ports:
      - "8080:8080"
      - "8123:8123"
    networks:
      - cluster_1S_2R_ch_proxy
    volumes:
      - ./traefik/traefik.yml:/etc/traefik/traefik.yml
      - ./traefik/config/dynamic.yml:/tmp/dynamic.yml
```

Open http://localhost:8080/dashboard/ in a browser to view the Traefik dashboard.
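Besides the dashboard, `api.insecure: true` also exposes Traefik's JSON API on port 8080. This Python sketch assumes the Traefik v2 endpoints `/api/http/routers` and `/api/http/services`. It lists each router and service with its status, which is a quick way to confirm that `dynamic.yml` was loaded. Names carry a provider suffix such as `@file`, and the helper names are hypothetical.

```python
import json
import urllib.request

API = "http://localhost:8080/api"  # exposed because traefik.yml sets api.insecure: true


def fetch_json(url: str, timeout: float = 5.0):
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def summarize(items: list) -> dict:
    """Map each router/service name (e.g. 'ck-router@file') to its status."""
    return {item.get("name"): item.get("status") for item in items}


if __name__ == "__main__":
    for kind in ("routers", "services"):
        print(kind, summarize(fetch_json(f"{API}/http/{kind}")))
```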

Load Balancer Test

Check that requests go through the Traefik reverse proxy:

```shell
$ echo "SELECT DISTINCT hostName() FROM cluster('cluster_1S_2R', system.tables)" | curl 'http://default:123456@1S_2R.localhost:8123' --data-binary @-
clickhouse-01
$ echo "SELECT DISTINCT hostName() FROM cluster('cluster_1S_2R', system.tables)" | curl 'http://default:123456@1S_2R.localhost:8123' --data-binary @-
clickhouse-02
```

Consecutive requests are answered by different nodes, which shows that Traefik is load-balancing access to cluster_1S_2R.
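A short Python loop shows the distribution over more than two requests. This sketch assumes the proxy listens on 127.0.0.1:8123. It sets the `Host` header to `1S_2R.localhost` explicitly, because Python's standard resolver may not map `*.localhost` names to loopback. `ask_proxy` and `tally` are hypothetical names.

```python
import base64
import urllib.request
from collections import Counter

SQL = "SELECT DISTINCT hostName() FROM cluster('cluster_1S_2R', system.tables)"


def ask_proxy(sql: str, user: str = "default", password: str = "123456") -> str:
    """POST the query to Traefik with the Host header that ck-router matches."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(
        "http://127.0.0.1:8123/",
        data=sql.encode(),  # a body makes this a POST, like curl --data-binary
        headers={"Host": "1S_2R.localhost", "Authorization": f"Basic {token}"},
    )
    with urllib.request.urlopen(req, timeout=5.0) as resp:
        return resp.read().decode().strip()


def tally(send, sql: str, requests: int) -> Counter:
    """Count which ClickHouse host answered each of `requests` queries."""
    return Counter(send(sql) for _ in range(requests))


if __name__ == "__main__":
    print(tally(ask_proxy, SQL, 20))
```

With the plain `loadBalancer` configuration, the two hostnames should come back in roughly equal numbers. With the 3:1 weighted variant, clickhouse-01 should answer about three times as often.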